1. Paper Information

1.1. Authors

Rayson Laroca, Luiz A. Zanlorensi, Gabriel R. Gonçalves, Eduardo Todt, William Robson Schwartz, David Menotti.

1.2. Abstract

This paper presents an efficient and layout-independent Automatic License Plate Recognition (ALPR) system based on the state-of-the-art YOLO object detector that contains a unified approach for license plate (LP) detection and layout classification to improve the recognition results using post-processing rules. The system is conceived by evaluating and optimizing different models, aiming at achieving the best speed/accuracy trade-off at each stage. The networks are trained using images from several datasets, with the addition of various data augmentation techniques, so that they are robust under different conditions. The proposed system achieved an average end-to-end recognition rate of 96.9% across eight public datasets (from five different regions) used in the experiments, outperforming both previous works and commercial systems in the ChineseLP, OpenALPR-EU, SSIG-SegPlate and UFPR-ALPR datasets. In the other datasets, the proposed approach achieved competitive results to those attained by the baselines. Our system also achieved impressive frames per second (FPS) rates on a high-end GPU, being able to perform in real time even when there are four vehicles in the scene. An additional contribution is that we manually labeled 38,351 bounding boxes on 6,239 images from public datasets and made the annotations publicly available to the research community.

1.3. Citation

If you use our trained models or the annotations provided by us in your research, please cite our paper:

- R. Laroca, L. A. Zanlorensi, G. R. Gonçalves, E. Todt, W. R. Schwartz, D. Menotti, “An Efficient and Layout-Independent Automatic License Plate Recognition System Based on the YOLO Detector,” IET Intelligent Transport Systems, vol. 15, no. 4, pp. 483-503, 2021. [Wiley] [PDF] [BibTeX]

You may also be interested in the conference version of this paper, where we introduced the UFPR-ALPR dataset:

- R. Laroca, E. Severo, L. A. Zanlorensi, L. S. Oliveira, G. R. Gonçalves, W. R. Schwartz, D. Menotti, “A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector,” in International Joint Conference on Neural Networks (IJCNN), July 2018, pp. 1–10. [Webpage] [IEEE Xplore] [PDF] [BibTeX] [Presentation] [NVIDIA News Center] [Video Demonstration]

2. Downloads

2.1. Proposed ALPR System

The Darknet framework was employed to train and test our networks. However, we used AlexeyAB’s version of Darknet, which has several improvements over the original, including improved neural network performance by merging two layers into one (convolutional and batch normalization), optimized memory allocation during network resizing, and many other code fixes.

The architectures and weights can be downloaded below:

- Vehicle Detection: network descriptor, data descriptor, weights, classes

- LP Detection and Layout Classification: network descriptor, data descriptor, weights, classes

- LP Recognition: network descriptor, data descriptor, weights, classes

2.2. Annotations

We manually annotated the position of the vehicles, LPs and characters, as well as their classes, in each image of the public datasets used in this work that have no annotations or contain labels only for part of the ALPR pipeline. Specifically, we manually labeled 38,351 bounding boxes on 6,239 images. The data available for download in this subsection consists only of annotations, as the images used to train/evaluate our networks are from public datasets not owned by us or that contain license agreements.

Before you can download the annotations, we kindly ask you to register by sending an e-mail with the following subject: “ALPR Annotations” to the first author (rblsantos@inf.ufpr.br), so that we can know who is using the provided data and notify you of future updates. Please include your name, affiliation and department in the e-mail. Once you have registered, you will receive a link to download the database. In general, a download link will take 1-3 workdays to issue.

The list of all images not used in our experiments is provided along with the annotations.

3. Running (Linux)

For the following commands, we assume that you have AlexeyAB’s version of the Darknet framework correctly compiled. Remember that we achieved real-time using an AMD Ryzen Threadripper 1920X 3.5GHz CPU, 32 GB of RAM, and an NVIDIA Titan Xp GPU.

3.1. Setting up the environment

Go to your Darknet framework folder and download the weights and configuration files (data/network descriptors and class names) for the networks. You can use the following commands (or click here):

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/vehicle-detection.cfg

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/vehicle-detection.data

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/vehicle-detection.weights

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/vehicle-detection.names

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-detection-layout-classification.cfg

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-detection-layout-classification.data

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-detection-layout-classification.weights

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-detection-layout-classification.names

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-recognition.cfg

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-recognition.data

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-recognition.weights

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/lp-recognition.names

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/sample-image.jpg

wget http://www.inf.ufpr.br/vri/databases/layout-independent-alpr/data/README.txt

3.2. Vehicle Detection

The first stage in our approach is vehicle detection using a model based on YOLOv2 (all modifications made by us to this model are described in the paper). Thus, considering an example image (sample-image.jpg), use the following command:

./darknet detector test vehicle-detection.data vehicle-detection.cfg vehicle-detection.weights -thresh .25 <<< sample-image.jpg

As a result, you should see an image like the one below.

3.3. License Plate Detection and Layout Classification

Once we have the vehicle patches, you must crop them and feed each into the modified Fast-YOLOv2 network. Assuming that we crop the car and motorcycle patches and named them as car.jpg and motorcycle.jpg, respectively, use the following commands:

./darknet detector test lp-detection-layout-classification.data lp-detection-layout-classification.cfg lp-detection-layout-classification.weights -thresh .01 <<< motorcycle.jpg

./darknet detector test lp-detection-layout-classification.data lp-detection-layout-classification.cfg lp-detection-layout-classification.weights -thresh .01 <<< car.jpg

Considering only the detection with the highest confidence value in each image, the results should be:

3.4. License Plate Recognition

Finally, on each vehicle patch, we need to crop the bounding box of the license plate found in the previous stage (enlarging it so that they have aspect ratios (w / h) between 2.5 and 3.0). Suppose the new image files are named lp-car.jpg and lp-motorcycle.jpg, we need to forward them into the CR-NET network:

./darknet detector test lp-recognition.data lp-recognition.cfg lp-recognition.weights -thresh .5 <<< lp-motorcycle.jpg

./darknet detector test lp-recognition.data lp-recognition.cfg lp-recognition.weights -thresh .5 <<< lp-car.jpg

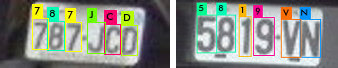

Afterward, you should see the images below:

As can be seen, in both cases, all license plate characters were correctly recognized.

We also designed heuristic rules to adapt the results produced by CR-NET according to the predicted layout class (see our paper for more details). For example, based on the datasets employed in our work, we consider that Taiwanese license plates have between 5 and 6 characters. Thus, no changes were performed on the predictions shown above, as they already fit the heuristics rules.

4. Contact

A list of all papers on ALPR published by us can be seen here.

5. Contact

Please contact the first author (Rayson Laroca) with questions or comments.