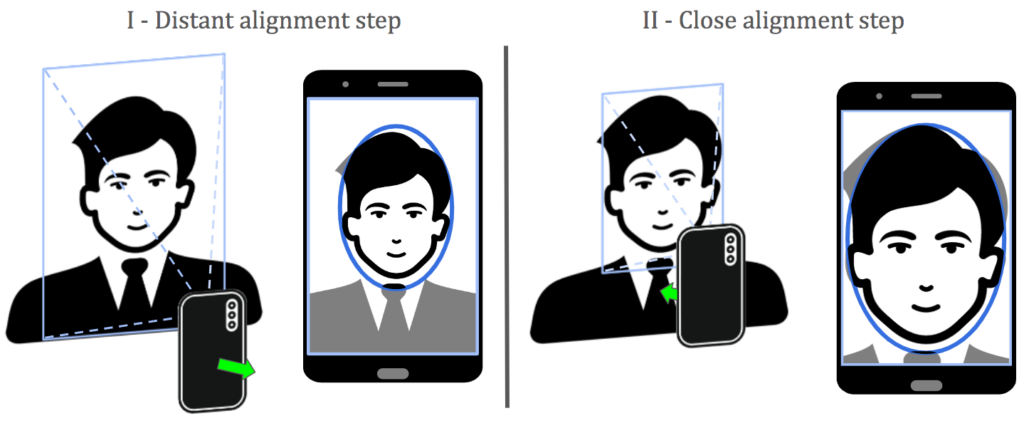

The UFPR-Close-Up dataset contains videos of an active interaction called the close-up movement, which consists of two steps. In the first step, the user must move away from the device to position their face within a small area highlighted on the screen. Once the face has been aligned and held in place for at least one second, the second step begins. In this step, the user is prompted to position their face within a larger area displayed on the screen, which requires moving closer to the device to align the face again and hold the position for one second. The face detector continuously monitors the face’s position; if the alignment is lost, the app reverts to the previous step, requiring the user to realign their face within the indicated area.
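The two-step interaction described above can be read as a small state machine. The following Python sketch is only an illustration of that reading and is not the code of our app: the names `CloseUpChallenge`, `Phase`, `update` and `HOLD_SECONDS` are hypothetical, the `aligned` flag stands in for the face detector's output relative to the area currently shown, and we assume here that losing alignment cancels the hold in progress so the user must realign and hold again.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

HOLD_SECONDS = 1.0  # hold duration stated in the description above


class Phase(Enum):
    SMALL_AREA = "small_area"  # step 1: user moves away from the device
    LARGE_AREA = "large_area"  # step 2: user moves closer to the device
    DONE = "done"


@dataclass
class CloseUpChallenge:
    """Hypothetical tracker for the close-up movement (illustrative only)."""
    phase: Phase = Phase.SMALL_AREA
    hold_start: Optional[float] = None  # time at which the current hold began

    def update(self, aligned: bool, now: float) -> Phase:
        """Advance the challenge given one face-detector observation.

        `aligned` means the face fits the area shown for the current phase.
        """
        if self.phase is Phase.DONE:
            return self.phase
        if not aligned:
            # Assumption: losing alignment cancels the hold in progress.
            self.hold_start = None
            return self.phase
        if self.hold_start is None:
            self.hold_start = now
        if now - self.hold_start >= HOLD_SECONDS:
            # Hold completed: move to the next step and start a fresh hold.
            if self.phase is Phase.SMALL_AREA:
                self.phase = Phase.LARGE_AREA
            else:
                self.phase = Phase.DONE
            self.hold_start = None
        return self.phase


# Simulated (timestamp in seconds, aligned) observations.
observations = [
    (0.0, True), (0.5, False), (1.0, True), (2.0, True),
    (2.5, True), (3.0, False), (3.5, True), (4.5, True),
]
challenge = CloseUpChallenge()
phases = [challenge.update(aligned, t) for t, aligned in observations]
print([p.value for p in phases])
```

In this simulation the alignment is lost once in each step (at 0.5 s and at 3.0 s), so each hold has to be restarted before the challenge can progress.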

The dataset has 2,561 videos: 714 are genuine samples from volunteer subjects and 1,847 are spoof samples made using selected face images from the CelebA dataset and CelebV videos, displayed on several presentation attack instruments covering the most common categories of attacks. It was introduced in our paper [PDF].

Live samples were captured by the participants using their own smartphones through a mobile application (app) developed by us, with a minimum interval of 12 hours between sessions. Spoof samples were recorded using Android devices (Xiaomi Redmi Note 13, Moto G54, Samsung Galaxy S22FE, Samsung Galaxy S23, Samsung Galaxy A54, Samsung Galaxy A34) and iOS devices (iPhone 8, iPhone 12, iPhone 14) with a modified version of the app that allows labeling the spoof attack type and the instrument used while recording samples.

In total, the dataset has 382 different live subjects and 1,043 spoof targets. Spoof targets were selected based on the gender and pose (yaw and pitch angles) distributions of the live samples. Spoof samples are composed of four different presentation attacks: Photo, display, Replay, Scaling and Mask, each with two different presentation attack instruments.

Four different protocols were proposed to evaluate the robustness of active presentation attack detectors in challenging scenarios, each with a different small domain shift, such as unseen presentation attack instruments, unknown presentation attacks and different camera noises.

Information about the distribution of videos by presentation attack, protocol details and the benchmark is available in our paper.

How to obtain the Dataset

Due to private financial support for the creation of this dataset and in compliance with data protection agreements, all spoof samples are currently available, but live samples will be released starting in April 2027.

The UFPR-Close-Up dataset is released only to academic researchers from educational or research institutes for non-commercial purposes.

To download the dataset, please read this license agreement carefully, fill it out and send it back to Professor David Menotti (menotti@inf.ufpr.br). The license agreement MUST be reviewed and signed by the individual or entity authorized to make legal commitments on behalf of the institution or corporation (e.g., Department/Administrative Head, or similar). We cannot accept licenses signed by students or faculty members.

References

If you use the UFPR-Close-Up dataset in your research, please cite our paper:

- BibTeX

Contact

Please contact Bruno H. Kamarowski (bhkc18@inf.ufpr.br) with questions or comments.